On September 20th, 2023, the UK’s Online Safety Act passed its final parliamentary hurdle and is set to become law. The Act will impose unprecedented obligations on the trust and safety policies and operations of relevant online services operating in the UK.

By and large, these obligations fall into two groups: (1) new substantive duties to act against illegal and harmful content, and (2) somewhat countervailing duties to protect freedom of expression and allow for complaints against content moderation actions.

Regulated online service providers will need to respond quickly and efficiently. Duties relating to illegal content can be expected to come into effect almost immediately, whilst duties relating to child safety and other additional duties facing the largest services will come into effect over a longer timeframe of around 6-12 months.

Who will the Online Safety Act apply to?

The Act (referred to below as the OSB, after its Bill-stage name, the Online Safety Bill) will apply primarily to online services that have “links with the United Kingdom”.

To fall into scope, online services must either:

- Allow users to generate or upload content to their platform, and allow other users to encounter that content (“user-to-user online services”), or

- Be, or include, a search engine (“search services”).

The Act defines “links with the United Kingdom” broadly, to include any services that:

- Have a significant number of users in the UK, or

- Have the UK as a target market, or

- Are capable of being used by individuals in the UK and pose a material risk of significant harm to such individuals through content or search results present on the service.
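The two-part scope test above (a qualifying service type plus at least one UK link) can be sketched as a simple boolean check. This is an illustrative simplification rather than legal advice: the `Service` fields and function names are hypothetical, each flag stands in for a judgement a compliance team would have to make, and the sketch ignores any exemptions or finer statutory detail.

```python
from dataclasses import dataclass


@dataclass
class Service:
    # Hypothetical flags; each one is a judgement call in practice.
    user_to_user: bool           # users can post content that other users encounter
    search: bool                 # the service is, or includes, a search engine
    significant_uk_users: bool   # has a significant number of UK users
    targets_uk: bool             # the UK is a target market
    usable_in_uk: bool           # can be used by individuals in the UK
    material_risk_of_harm: bool  # material risk of significant harm to those individuals


def has_uk_links(s: Service) -> bool:
    # Any one of the three "links with the United Kingdom" limbs is enough.
    return (
        s.significant_uk_users
        or s.targets_uk
        or (s.usable_in_uk and s.material_risk_of_harm)
    )


def in_scope(s: Service) -> bool:
    # A service must be of a regulated type AND have a UK link.
    return (s.user_to_user or s.search) and has_uk_links(s)


# Example: a small forum that markets itself to UK users.
forum = Service(
    user_to_user=True, search=False,
    significant_uk_users=False, targets_uk=True,
    usable_in_uk=True, material_risk_of_harm=False,
)
print(in_scope(forum))
```

Note that the third limb needs both conditions at once: a service that is merely reachable from the UK, with no material risk of significant harm, does not establish a UK link on that limb alone.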

How will the Online Safety Act affect your business?

Most immediately, regulated online service providers will need to ensure that they have well-designed and easy-to-use notice-and-action and complaint-handling mechanisms in place. They will also need to conduct an illegal content risk assessment and keep a detailed record. The next step will be to manage and mitigate harm from the risks identified, while balancing duties to protect freedom of expression and privacy in any measures taken. For Category 1 services, duties to protect journalistic, news publisher and democratic content must also be factored into this balancing exercise.

As another early priority, service providers will need to conduct children’s access assessments to determine whether the child-safety obligations will apply to them. This might involve deciding whether to implement mechanisms that effectively prevent children from accessing their platform or, otherwise, to prepare to comply with the new child-safety obligations. If children are found to be able to access the service, the company will be required to carry out children’s risk assessments, manage and mitigate the identified risks, and implement a user reporting mechanism for content that is harmful to children, among other duties.

Beyond these immediate priorities, some companies will also face additional duties if they are categorised into one of the following groups by OFCOM. The main categorisations are:

Category 1: user-to-user services with additional obligations.

Category 2A: search services with additional obligations.

The specific thresholds for the categories will be set out in secondary legislation and will depend on the size of the platform (in users), its functionalities, and the resulting potential risk of harm.

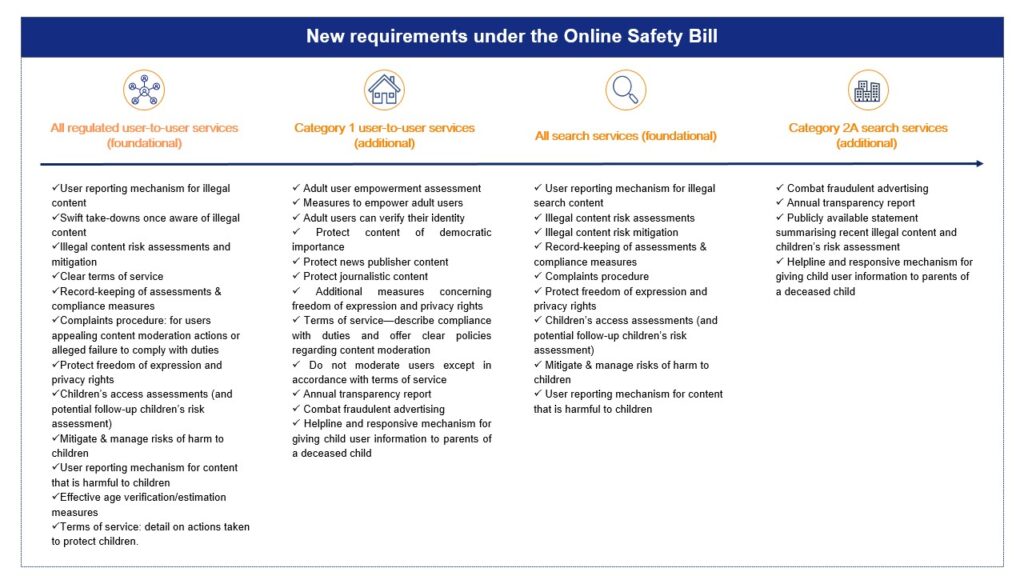

The following table summarises the main duties that will apply to the providers of relevant online services:

Obligations for different categories from the Online Safety Act

Who will enforce the Online Safety Act?

OFCOM will be charged with supervising the regulated services and enforcing their new obligations. The regulator will have the power to open investigations, carry out inspections, penalise infringements, impose fines, and request the temporary restriction of a service in the case of a continued or serious offence.

Failure to comply with obligations can result in fines of up to £18 million or 10% of qualifying worldwide revenue, whichever is higher. Furthermore, criminal proceedings can be brought against named senior managers of offending companies that fail to comply with information notices issued by OFCOM.
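The “whichever is higher” rule can be sketched as a one-line calculation. This is a rough illustration only: the function and constant names are hypothetical, the result is the ceiling on a fine rather than a predicted penalty, and working out what counts as qualifying worldwide revenue is a separate exercise not modelled here.

```python
FIXED_AMOUNT_GBP = 18_000_000  # the fixed figure stated in the Act
REVENUE_DIVISOR = 10           # 10% of qualifying worldwide revenue


def maximum_fine_gbp(qualifying_worldwide_revenue_gbp: float) -> float:
    # The ceiling is whichever of the two amounts is higher.
    revenue_based = qualifying_worldwide_revenue_gbp / REVENUE_DIVISOR
    return max(FIXED_AMOUNT_GBP, revenue_based)


# Below £180 million of qualifying revenue the fixed figure is the higher one.
print(maximum_fine_gbp(50_000_000))
# Above it, the revenue-based figure takes over.
print(maximum_fine_gbp(1_000_000_000))
```

The crossover sits at £180 million of qualifying worldwide revenue, the point at which 10% of revenue equals £18 million.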

When will the new rules apply?

While the Act will be technically enforceable within days, OFCOM will gradually interpret and announce the practical operational actions that providers should take to achieve compliance. The most immediately enforceable duties will be those relating to illegal content, with OFCOM set to publish draft codes of practice this month. Child safety duties will come into force over a longer time frame, with OFCOM planning consultations on draft codes of practice after six months. The additional duties applying to the largest services will also be slower to come into effect, as they depend on OFCOM categorising services, which it has stated is its third priority after conducting consultations on the codes of practice for child safety.

How will the OSB interact with the EU’s Digital Services Act?

As with the DSA, the OSB’s extraterritorial reach will bring an unprecedented number of online service providers into scope. The OSB and the DSA apply to relevant service providers with broadly defined “links” to the UK and the EU respectively, regardless of whether they host data or have a physical organisational presence there.

In another commonality, both the OSB and DSA impose a tiered structure of increasingly stringent obligations depending on the size and risk profile of online platforms and service providers. However, companies will need to pay attention to how obligations are divided between platform and service provider categories, as the OSB applies some obligations universally where the DSA does not, and vice versa.

An important difference in the division of obligations relates to illegal content risk assessments, which will be required of all regulated service providers under the OSB but are only required of Very Large Online Platforms (VLOPs) and Very Large Online Search Engines (VLOSEs) by the DSA. At a finer level, the subject matter of such risk assessments also differs between the two regulations, with the OSB focusing on illegal content and the DSA focusing on the design, functioning and use of platforms. Companies will need to carefully consider these different foci in drafting their relevant assessments.

The OSB and DSA also differ in their treatment of illegal content. The OSB attaches different obligations to different types of illegal content, whereas the DSA treats all illegal content equally. For example, under the OSB providers will only need to take proactive steps regarding priority illegal content, with duties applying to other illegal content only once services are notified of its presence. Moreover, under the OSB, online services will face duties to assess, manage and mitigate harm not only from illegal terrorist and child abuse content, but also from content that is harmful to children even where it is not illegal. By comparison, the DSA applies solely to illegal content.

Navigating the combined effect of these new legal requirements presents a complex challenge for relevant service providers.

How can Tremau help you?

Tremau offers a unified Trust & Safety content moderation platform that prioritises compliance as a service. We integrate workflow automation and AI tools, helping online service providers meet the UK’s Online Safety Act requirements while boosting trust & safety metrics and reducing administrative burden.

Not sure how the Act applies to you or how it interacts with your obligations under the DSA?

Tremau’s expert advisory team can help you assess your obligations and prepare an operational roadmap to compliance.

To find out more, contact us at info@tremau.com.